Performance Test Validations

To validate your application’s performance:

- Learn testing prerequisites.
- Follow best practices.
- Execute performance testing.

Performance Test Validation Prerequisites

Before executing performance testing:

- Confirm that your Mule app and its functions work as expected, because a faulty flow can produce false-positive data.
- Establish performance test criteria by asking yourself the following questions:
  - What are the expected average and peak workloads?
  - What specific need does your use case address?
    - Throughput, when handling a large volume of transactions is a high priority.
    - Response time or latency, if spikes in activity negatively affect user experience.
    - Concurrency, if it is necessary to support a large number of users connecting at the same time.
    - Managing large messages, when the application is transferring, caching, storing, or processing a payload bigger than 1 MB.
  - What is the minimum acceptable throughput?
  - What is the maximum acceptable response time?
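Once the questions above are answered, the criteria can be written down in a form that a test run is checked against automatically. The following is a minimal sketch, not part of any MuleSoft tooling: `PerfCriteria`, `evaluate`, and the sample numbers are hypothetical names and values chosen for illustration.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass(frozen=True)
class PerfCriteria:
    """Hypothetical container for the answers to the criteria questions."""
    min_throughput_tps: float    # minimum acceptable throughput (transactions/second)
    max_response_time_ms: float  # maximum acceptable response time (milliseconds)


def evaluate(criteria, response_times_ms, duration_s):
    """Compare the measurements of one test run against the criteria."""
    throughput = len(response_times_ms) / duration_s
    worst = max(response_times_ms)
    return {
        "throughput_tps": throughput,
        "avg_response_ms": mean(response_times_ms),
        "max_response_ms": worst,
        "passed": (throughput >= criteria.min_throughput_tps
                   and worst <= criteria.max_response_time_ms),
    }


# Illustrative values only: four requests completed in a 2-second window.
criteria = PerfCriteria(min_throughput_tps=2.0, max_response_time_ms=500.0)
report = evaluate(criteria, [120.0, 180.0, 240.0, 460.0], duration_s=2.0)
print(report)
```

Fixing the thresholds before the first run keeps the pass/fail decision independent of whatever numbers the test happens to produce.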

Performance Test Validations Best Practices

Testing best practices require you to consider your testing environment, your testing tools, component isolation, and representative workloads.

Use a Controlled Environment

Use a self-controlled environment to obtain repeatable, reliable, and consistent data:

-

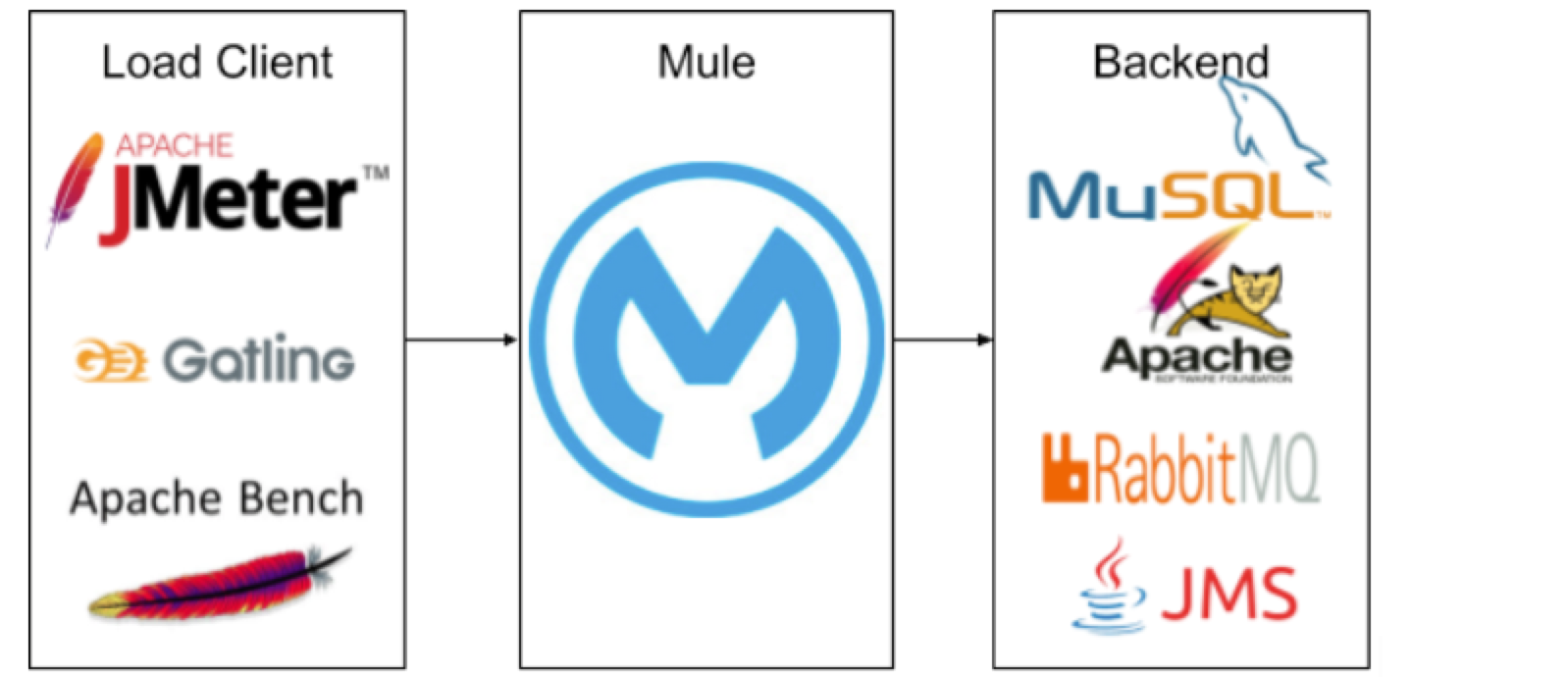

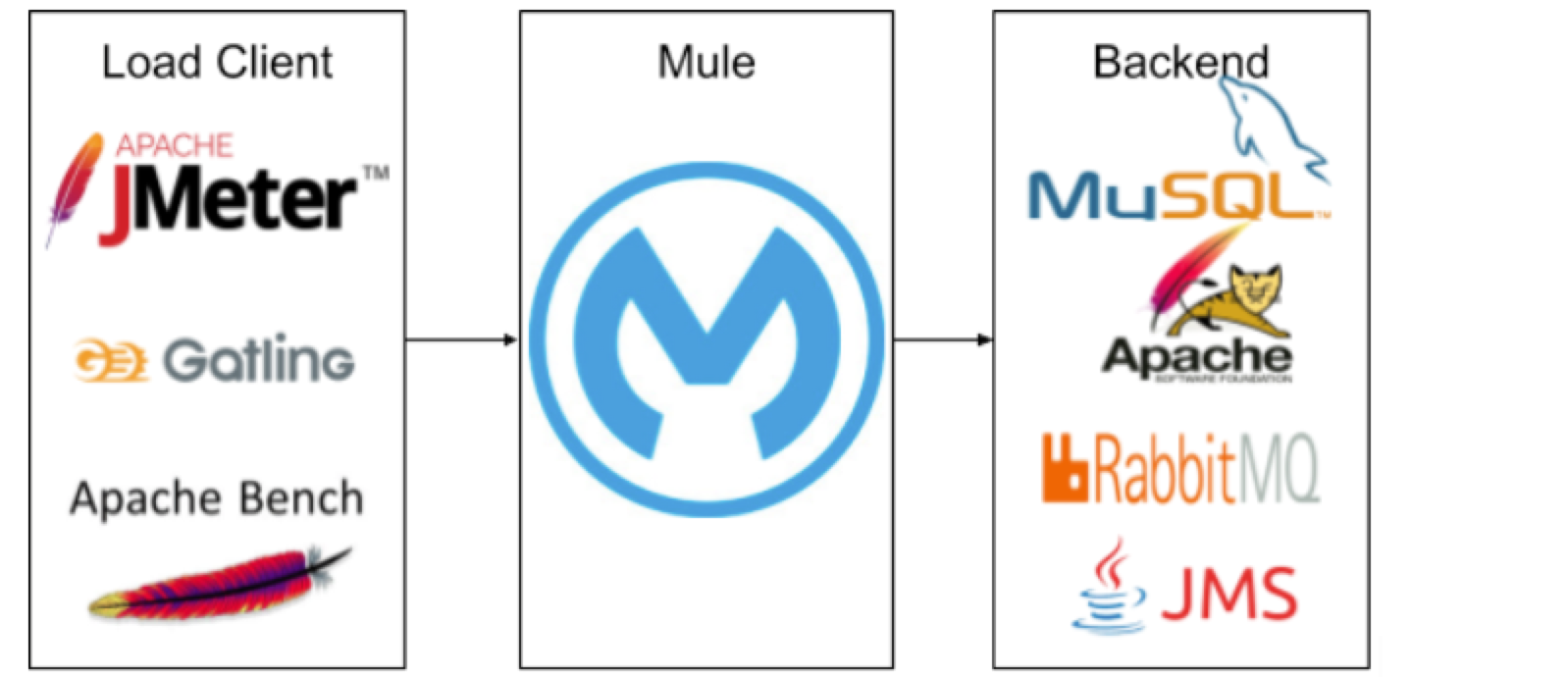

Use an infrastructure made by a triplet:

-

Use a dedicated host for the Mule application to isolate app information, preventing other running processes from interfering with the results in case of on-premises tests.

-

Use a separate dedicated host for running load client tools that can drive the load without becoming a bottleneck.

-

Use a separate dedicated host to run backend services like the database, Tomcat, JMS, ActiveMQ, and so on. Test using the same host that the application uses in production.

-

For on-premises tests, run the load client, runtime server, and backend in the same Anypoint Virtual Private Cloud (Anypoint VPC) to decrease network latency.

-

For CloudHub tests, run the load client and backend server on dedicated machine that is separate from but in the same region as the CloudHub worker to decrease network latency.

-

-

Use a wired stable network.

-

Don’t use your laptop because it may outperform the same application deployed to a customer’s environment, particularly if it is virtualized.

Choose the Right Components and Tools

The components used for testing can make a big difference for your use case. To get realistic performance data, use the most suitable load injector and a backend service configured with the same setup as your customer environment. Then choose the load-test tool that best matches your use case. The MuleSoft Performance team uses Apache JMeter, but you can use any of the many available enterprise and open-source load-test tools, which cover a variety of implementations, such as single-threaded, multithreaded, and nonblocking. After you choose your testing tools and begin to use them, keep using the same tools so that your test results stay comparable. If you decide that the tools are not right for your use case and you change them, you must execute all of the tests again.
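Whichever tool you choose, summarizing every run with the same calculation helps keep results comparable. The sketch below assumes that JMeter results were saved as CSV with a header row containing the `timeStamp`, `elapsed`, and `success` columns; `summarize_jtl` is a hypothetical helper, and the inline sample data is invented for illustration.

```python
import csv
import io


def summarize_jtl(csv_file):
    """Summarize a JMeter-style CSV result file (assumed column names)."""
    rows = list(csv.DictReader(csv_file))
    # timeStamp is the sample start in milliseconds; elapsed is its duration.
    starts = [int(r["timeStamp"]) for r in rows]
    ends = [int(r["timeStamp"]) + int(r["elapsed"]) for r in rows]
    duration_s = (max(ends) - min(starts)) / 1000.0
    errors = sum(1 for r in rows if r["success"].lower() != "true")
    return {
        "samples": len(rows),
        "throughput_tps": len(rows) / duration_s,
        "avg_elapsed_ms": sum(int(r["elapsed"]) for r in rows) / len(rows),
        "error_rate": errors / len(rows),
    }


# Invented sample data standing in for a real results file.
sample = io.StringIO(
    "timeStamp,elapsed,label,success\n"
    "1000,200,GET /orders,true\n"
    "1500,300,GET /orders,true\n"
    "2000,400,GET /orders,false\n"
    "2500,500,GET /orders,true\n"
)
summary = summarize_jtl(sample)
print(summary)
```

For a real run, pass an open file handle instead of the `io.StringIO` object.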

Isolate the Components of the Test

Because performance tuning is an iterative process in which any of many variables can negatively affect the results, you need to be able to identify and isolate the variables that cause a problem. To do this, split your application into several small pieces, each targeting a specific component or connector in the flow. This helps you identify bottlenecks and direct optimization efforts toward a specific flow element.
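The idea of measuring each piece on its own can be illustrated outside Mule with a small timing harness. This is a sketch only: `transform` and `slow_backend_call` are hypothetical Python stand-ins for flow elements, not Mule components, and `time_stage` is an illustrative helper.

```python
import time


def time_stage(stage, payload, repeats=5):
    """Return the best-of-N wall-clock time (seconds) for one stage run in isolation."""
    best = float("inf")
    for _ in range(repeats):
        start = time.perf_counter()
        stage(payload)
        best = min(best, time.perf_counter() - start)
    return best


def transform(payload):
    # Stand-in for a lightweight in-memory transformation step.
    return [x * 2 for x in payload]


def slow_backend_call(payload):
    # Stand-in for a connector that waits on a backend (simulated 20 ms delay).
    time.sleep(0.02)
    return payload


stages = {"transform": transform, "backend_call": slow_backend_call}
payload = list(range(1000))

# Time every stage separately, then pick the slowest one as the bottleneck candidate.
timings = {name: time_stage(fn, payload) for name, fn in stages.items()}
bottleneck = max(timings, key=timings.get)
print(timings, bottleneck)
```

Timing each piece separately points the next tuning iteration at one element instead of the whole flow.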

Use Representative Workloads

When you test your application, it is important to imitate realistic customer use cases. To do so, gather information about the following characteristics of actual customer scenarios:

- User behavior
- Workload (number of virtual users, number of messages, and so on)
- Payloads (types, sizes, and complexity)

Using this information, you can then create different repeatable, common, and high-load scenarios that test the performance limits of Mule without causing failure. To imitate actual customer behavior, introduce variables such as think time between requests and latency to the backend service.
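A repeatable scenario with think time can be sketched as a precomputed request schedule. The example below is illustrative only: `build_schedule`, its parameters, and the values used are assumptions, and a fixed random seed is what makes the scenario reproducible from run to run.

```python
import random


def build_schedule(virtual_users, requests_per_user, think_time_s,
                   payload_sizes_kb, seed=42):
    """Build a sorted list of (send_time_s, user_id, payload_kb) tuples."""
    rng = random.Random(seed)  # fixed seed: the same scenario on every run
    schedule = []
    for user in range(virtual_users):
        clock = 0.0
        for _ in range(requests_per_user):
            # Each user pauses for a random think time before the next request.
            clock += rng.uniform(*think_time_s)
            schedule.append((round(clock, 3), user, rng.choice(payload_sizes_kb)))
    schedule.sort()
    return schedule


# Illustrative workload: 3 users, 4 requests each, 0.5 to 2.0 s think time.
schedule = build_schedule(
    virtual_users=3,
    requests_per_user=4,
    think_time_s=(0.5, 2.0),
    payload_sizes_kb=[1, 10, 100],
)
for send_time, user, size_kb in schedule:
    print(f"t={send_time:6.3f}s user={user} payload={size_kb} KB")
```

A load tool can then replay the schedule, so that differences between runs come from the system under test and not from the workload.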

Execute Performance Test Validations

Before you start test execution, ideally remove any ramp-up, teardown, and error percentage settings that can affect the throughput calculation. Keeping in mind the best practices from the previous section, execute the testing:

- Apply performance testing to spot and validate the bottlenecks of the applications.
- Use an iterative approach for tuning issues that you find:
  - For a CloudHub application, tune Mule flows and configurations.
  - For on-premises applications:
    - Tune Mule flows and configurations.
    - Tune the JVM, GC, and so on.
    - Tune operating system variables.
- Execute the test several times for trustworthy results. Make one Tuning Recommendation change at a time and then retest.
- Check the test results.
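One way to judge whether repeated runs are trustworthy, and whether a single tuning change helped, is to compare the mean and the spread of the measured throughput. The sketch below is an assumption-laden illustration: `summarize_runs`, the 5% variation threshold, and all throughput figures are hypothetical.

```python
from statistics import mean, stdev


def summarize_runs(throughputs_tps, max_cv=0.05):
    """Summarize repeated runs; max_cv is an assumed stability threshold."""
    avg = mean(throughputs_tps)
    cv = stdev(throughputs_tps) / avg  # coefficient of variation across runs
    return {"mean_tps": avg, "cv": cv, "stable": cv <= max_cv}


# Invented figures: three runs before and three runs after one tuning change.
baseline = summarize_runs([98.0, 100.0, 102.0])
tuned = summarize_runs([108.0, 110.0, 112.0])

# Relative change in mean throughput attributable to the single change.
improvement = (tuned["mean_tps"] - baseline["mean_tps"]) / baseline["mean_tps"]
print(baseline, tuned, f"improvement={improvement:.1%}")
```

If either set of runs is not stable, rerun the test before drawing conclusions about the change.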